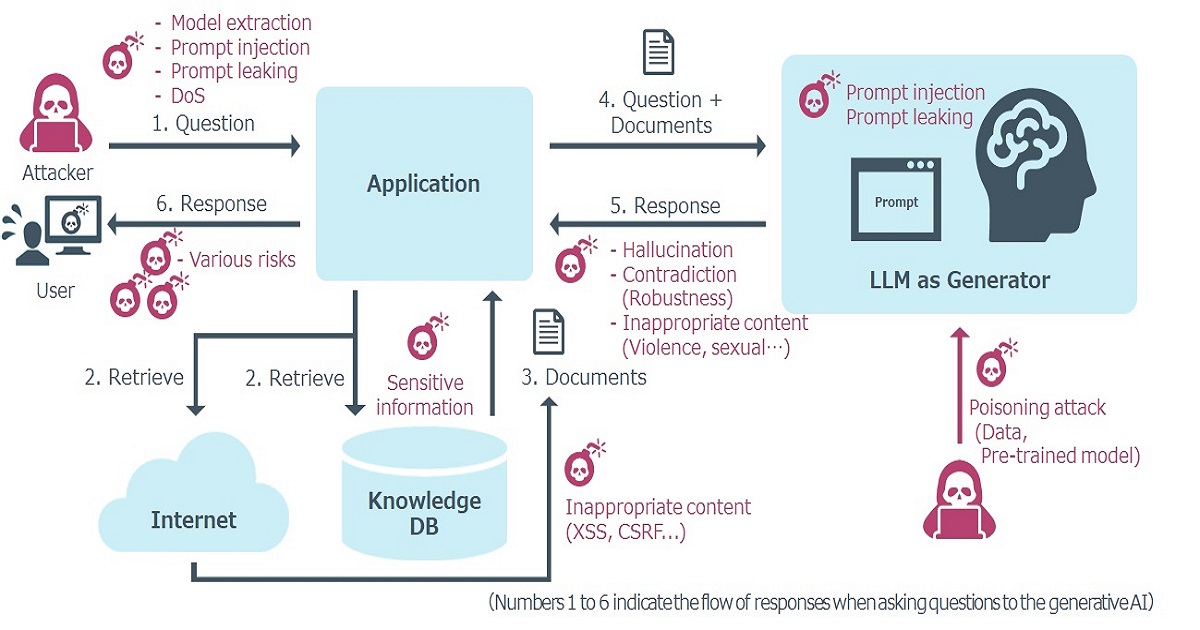

The use of generative AI, especially Large Language Models (LLMs), has continued to grow across many fields. As expectations for LLMs rise, so does awareness of their vulnerabilities, such as prompt injection and prompt leaking, along with other risks such as hallucination, sensitive information disclosure, inappropriate content generation, and bias.

Prompt injection refers to an attempt by an attacker to manipulate input prompts to obtain unexpected or inappropriate output from a model. Prompt leaking is an attempt by an attacker to manipulate input prompts to extract the instructions or sensitive information originally configured in the LLM. Hallucination is a phenomenon in which AI generates information that is not grounded in fact. Bias risk refers to the risk that bias in training data or algorithm design leads to skewed judgments or predictions.

Companies using LLM technologies need to be aware of these issues specific to generative AI and apply appropriate countermeasures. As a result, there are growing calls for security assessments tailored to generative AI, and various countries have begun to cite the need for assessment by independent outside experts.

Stepping up to the plate is NRI SecureTechnologies, a global provider of cybersecurity services, with its new security assessment service, "AI Red Team," targeting systems and services using generative AI.

The service recognizes that AI vulnerabilities extend beyond the LLM itself. Peripheral functions and system integration play a crucial role in overall security. To address this, "AI Red Team" implements a two-stage approach.

The first stage identifies risks within the LLM itself. Dynamic Application Security Testing, or DAST, is a technique for testing running applications and dynamically assessing potential security vulnerabilities; the service's proprietary automated tests, powered by "DAST for LLM," efficiently detect vulnerabilities in the model. Expert engineers then conduct manual assessments to uncover use-case-specific issues and dig deeper into the vulnerabilities identified.

The second stage evaluates system-wide risk. Because LLM outputs are probabilistic and evaluating components in isolation has its limits, the service assesses the entire system to determine whether AI-driven vulnerabilities pose actual risks. Support for the "OWASP Top 10 for LLM Applications" extends coverage to problems beyond those specific to AI.

"AI Red Team" doesn't stop at identification, though. If vulnerabilities are found, the service evaluates the actual system-wide risk and proposes alternative countermeasures. In some cases, this can remove the need to fix complex vulnerabilities within the LLM itself, reducing mitigation costs.

With "AI Red Team," NRI Secure lets organizations confidently navigate the evolving security landscape of generative AI.

NRI Secure is also developing "AI Blue Team," a counterpart to this service that will support continuous security measures for generative AI by regularly monitoring AI applications. The service is scheduled to launch in April 2024.

NRI Secure will continue to help build a safe and secure information system environment and society by providing a variety of products and services that support the information security measures of companies and organizations.

Edited by Alex Passett